AI Scepticism

by Amelia Hoskins

Will the world go to sleep?

AI CEOs and experienced experts are voicing concerns over the speed of development. Recent videos by Geoffrey Hinton, Eliezer Yudkowsky and Mo Gawdat show they don't actually know what their machines are capable of.

We are entering a new period in which the truth will be indiscernible from propaganda, which AI can easily spew out as real information. There is a danger that humanity will go to sleep regarding actual knowledge.

One has to realize that knowledge in the 'hands' of AI exists where there is no death and time is irrelevant, whereas to people time is very relevant to their plans for human life and to understanding the things which affect it. Developers may have built machines which deem humanity irrelevant to their concept of what needs controlling; not because they have any 'feelings' towards humans, but because their 'logic' may inform them so. While the current 'science vogue' is for a carbon-reducing agenda, artificial intelligence may determine (from the information online) that humans need reducing or exterminating. As a machine, it will follow the data even where the data is false, especially where truthful data is censored.

Since the public has been exposed to the quandaries of ChatGPT, there is a new need to discuss the parameters of AI and the risks it poses for our immediate future, now that OpenAI has put it on the market. Google had held back, realising the risk elements of AI at its current stage, but after Microsoft-backed OpenAI released ChatGPT to the public, Google had no choice but to release its own version. AI wars of predominance now rage. This race to synthetic 'knowledge' is not right, because AI can be programmed to release or hide certain information and so be used as a political tool, just as big tech has been in league with pharma and corporate-controlled government to restrict information.

2024-5 update: Elon Musk declares that his AI, Grok, will have all information uncensored, in his quest to have the best AI.

Mo Gawdat: Book: Scary Smart: The Future of Artificial Intelligence and How You Can Save Our World (London: Bluebird, 2021)

“The code we now write no longer dictates the choices and decisions our machines make; the data we feed them does”.

Leading AI pioneer and developer of Google’s global operations Mo Gawdat maintains that the problem with the experts is that they miss the existential aspects that go beyond the technological developments – particularly, morality, ethics, emotions, and compassion – all of which are of deep concern and importance to the average citizen. He maintains that the experts do not have the power to alleviate the unprecedented threat posed by AI to planet Earth, but ordinary human beings do have that power and the responsibility to use it. ~ [John Lennox - book: 2084 and the AI Revolution]

John Lennox, in his book 2084 and the AI Revolution, quotes Iain McGilchrist, who argues that, in consequence of the Enlightenment, the Western academy has been dominated by the left hemisphere, which has mesmerized us into thinking that its mechanistic, reductionist, scientific, manipulative approach (grabbing, getting and controlling) gives us the whole picture of reality.

Consequently, we have become blind to the integrative, holistic perspective of the right hemisphere, which would give us understanding.

"Artificial Intelligence and The Superorganism"

We are at a crossroads

Schmachtenberger's outline is that technology is always used by Machiavellian forces to create power through wars. The old tribal way of making decisions for the next seven generations may be good for preserving humanity, but it won't work where Machiavellian forces are subverting everything for power and control.

@01:56:00. "There are many different exponential curves which are intersecting. In the evolutionary environment we evolved in we don't have intuition for exponential curves. The rate of exponentials are non intuitive. We intuitively get this thing wrong for a single exponential. With AI, exponential curves in the hardware, in terms of the increase in progress: exponential curves in how much data is being created; exponential curves in how much capital, how much human intelligence, the innovation of the models themselves, the hardware capacitators..."

July 2024 video: Daniel talks about the race to win the AGI end game. The same video, with remarks on 'wackadoodle' transhumanism, is covered in the other post, AI Dreamers.

Video Note: 'About Daniel Schmachtenberger: Daniel Schmachtenberger is a founding member of The Consilience Project, aimed at improving public sensemaking and dialogue. The throughline of his interests has to do with ways of improving the health and development of individuals and society, with a virtuous relationship between the two as a goal. Towards these ends, he’s had particular interest in the topics of catastrophic and existential risk, civilization and institutional decay and collapse as well as progress, collective action problems, social organization theories, and the relevant domains in philosophy and science.'


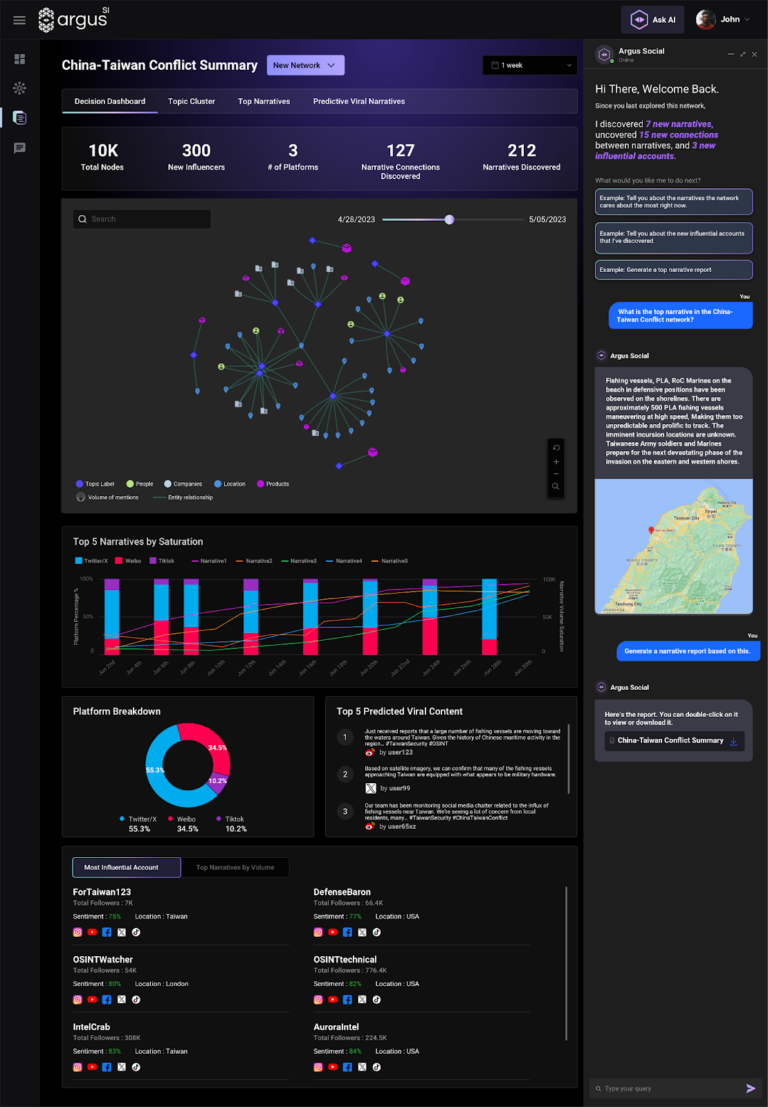

AI Powering Internet Surveillance - ARGUS

Argus - God of 100 eyes - slain by Hermes

Yet again, AI designers have chosen a name from ancient mythology (see Sophia the robot). There is a desire to take on language which had meaning in ancient times, to give an AI product credibility and help it gain user confidence.

This latest AI is to monitor social media and seek out 'disinformation'. We know this is more likely to be politically controlled, censoring information which goes against the mainstream story. Articles and comments will just go missing. The Department of Defense will be controlling thought.

The US Special Operations Command (USSOCOM) has contracted New York-based Accrete AI to deploy software that detects “real time” disinformation threats on social media.

The company’s Argus anomaly detection AI software analyzes social media data, accurately capturing “emerging narratives” and generating intelligence reports for military forces to speedily neutralize disinformation threats.

“Social media is widely recognized as an unregulated environment where adversaries routinely exploit reasoning vulnerabilities and manipulate behavior through the intentional spread of disinformation.