Lavender AI Slaughter Bot

by Amelia Hoskins

'LAVENDER' - Israel's AI kill-targeting system: another example of a word denoting something lovely being used to name a killing machine.

Permitted deaths - For every suspected Hamas member targeted, the IDF permitted 15 to 20 civilians to be killed in the attack. For higher-ranking targets, up to 100 civilian collateral deaths were permitted. (Those marked as targets included police, civil defence workers, and people with similar names.) This is how AI RUNS AMOK! Now no one will feel individually responsible.

We've moved into the age where 'the machine did it'!!!

These people are INSANE. Thousands were attacked at night in their homes by an automated system cynically named 'Where's Daddy?'. Women and children were killed indiscriminately along with the male targets.

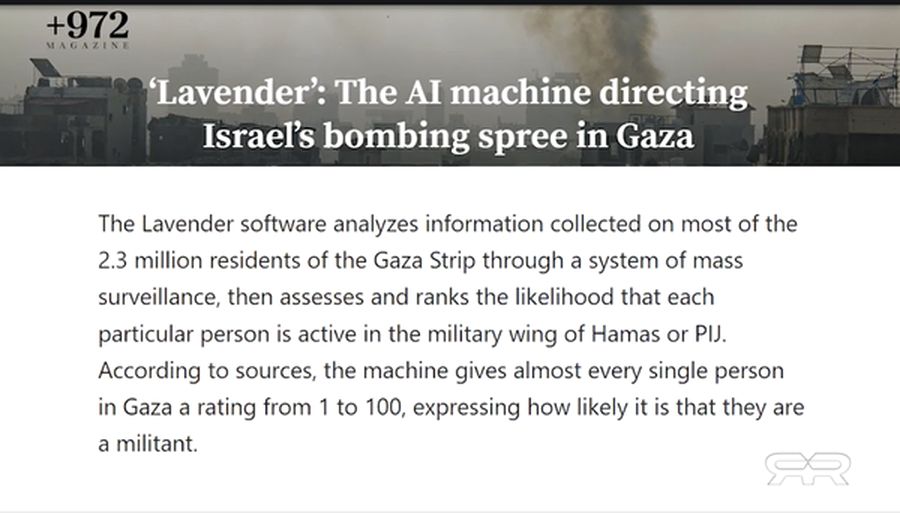

"During the first weeks of the war, the Lavender system designated about 37,000 Palestinians as targets and directed air strikes on their homes."

"The Lavender machine joins another AI system, “The Gospel,” about which information was revealed in a previous investigation by +972 and Local Call in November 2023, as well as in the Israeli military’s own publications. A fundamental difference between the two systems is in the definition of the target: whereas The Gospel marks buildings and structures that the army claims militants operate from, Lavender marks people — and puts them on a kill list."

A system of mass surveillance tracked Palestinians, and mass surveillance is coming fast to the Western world. Let's not imagine AI slaughter couldn't come to any Western country if some bad actor wished it!

To anyone watching the vast areas of destruction in Gaza, whether via real-time war broadcasts or media video, it was apparent that mass-killing directives were in use. Even the Guardian has reported on the Lavender AI slaughter, as has Al Jazeera, whose report Harrison goes over in his WarRoom video.

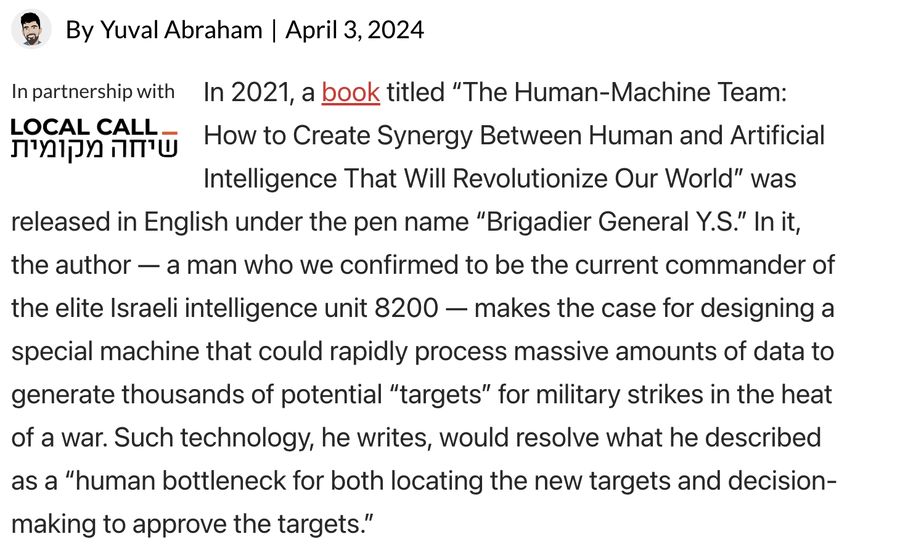

Book: The Human-Machine Team: How to Create Synergy Between Human and Artificial Intelligence That Will Revolutionize Our World - Brigadier Y.S.